Computer Vision AI, Human Eye and Human Experience Design

Computer Vision AI

Machines can intelligently comprehend many forms of visuals that the human eye perceives, and can often provide deeper insights more quickly than the human brain can achieve through the eye. Any visual data that has been converted into digital form can be comprehended by computer vision artificial intelligence (CV-AI), whatever the input method: cameras (traditional, infrared, webcams), LiDAR, RADAR, imaging technologies (thermal, satellite, multispectral and hyperspectral), depth sensors, and so on.

Computer Vision AI Usage

Throughout human evolution, from a time when there was no technology until today, vision (or eyesight) has remained a guiding star among our five senses, enabling humans to explore and understand the world. How, then, can we confine the boundless possibilities of CV-AI within mere limits?

Until now, the spectrum of CV-AI use has encompassed security, healthcare, construction, automotive, manufacturing, logistics and agriculture, and it continues to expand.

Every industry employing CV-AI has its own set of relevant use cases, varying in complexity and criticality. Among them, automotive and healthcare stand out with a high number of challenging scenarios, both typical and edge cases.

Design and AI Companionship

We have been enabling computers (AI) to sense and comprehend better than we do ourselves. Should we also strive to perceive the world as AI senses and comprehends it? Would that be truly legible to us, and could we fully understand its perspective in its own language?

At today’s stage of human evolution, the answer is no. Intuitively and naturally we cannot, because computers perceive all inputs as data. In computer vision, for example, AI perceives an object through the shapes, colours, textures, sizes and other visual features encoded in pixel data.
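To make "perceiving in data" concrete, here is a minimal sketch in Python. It assumes a tiny hand-made 4x4 RGB grid in place of a real camera frame. The colours, the helper names `mean_colour` and `bounding_extent`, and the idea of treating one colour as one "object" are illustrative assumptions, not part of any CV-AI library.

```python
from collections import Counter

# Hypothetical 4x4 "image": every pixel is just an (R, G, B) tuple of numbers.
RED, GREEN, GREY = (200, 30, 30), (40, 160, 60), (120, 120, 120)
image = [
    [GREY,  GREY,  GREY,  GREY],
    [GREY,  RED,   RED,   GREY],
    [GREY,  RED,   RED,   GREY],
    [GREEN, GREEN, GREEN, GREEN],
]


def mean_colour(pixels):
    """Average each channel (integer division) over a flat list of pixels."""
    n = len(pixels)
    return tuple(sum(p[channel] for p in pixels) // n for channel in range(3))


def bounding_extent(img, colour):
    """Return (top, left, bottom, right) of all pixels equal to `colour`."""
    coords = [
        (r, c)
        for r, row in enumerate(img)
        for c, pixel in enumerate(row)
        if pixel == colour
    ]
    rows = [r for r, _ in coords]
    cols = [c for _, c in coords]
    return min(rows), min(cols), max(rows), max(cols)


flat = [pixel for row in image for pixel in row]
colour_counts = Counter(flat)              # "colour" feature: pixels per colour
red_extent = bounding_extent(image, RED)   # "size and position" feature
```

Everything the machine "sees" here is counts and coordinates. Nothing in the data says what the red patch is, which is the gap the rest of this article is concerned with.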

“To bridge this gap between human and machine perception and achieve comprehension equality between human and machine, we require human experience (HX) designed tools, products, and an AI ecosystem.”

HXD (a holistic combination of human-centric design disciplines such as product design, UX design, interaction design, visual design, ergonomics, cognition, inclusive design, product semantics and more) creates an ecosystem that encompasses how humans see, how AI sees, and ultimately how humans see what AI sees within the realm of computer vision AI.

Human Eye vs Computer Vision

The following are the aspects I explored through a comparative analysis of the human eye’s function and some major steps involved in computer vision artificial intelligence (CV-AI):

- How humans see vs how AI sees/comprehends (Sight Mechanism).

- How humans perceive (Brain) vs how AI perceives (ML).

- How humans perceive what AI perceives (Tools, Simulations, Applications).

- How AI actions are defined to respond to perceptions and understandings of diverse individual entities, entity combinations, various use cases, and situations (autonomous/machine intelligence).

The steps above involve many layers of subjects, users, products, tools, ML, simulations, intelligence, visualisation techniques and services. Human experience design (HXD) and CV-AV-AI tech companionship are critical in the CV-AV-AI-HXD ecosystem to ensure the success of CV-AI applications in autonomous vehicles (AV).

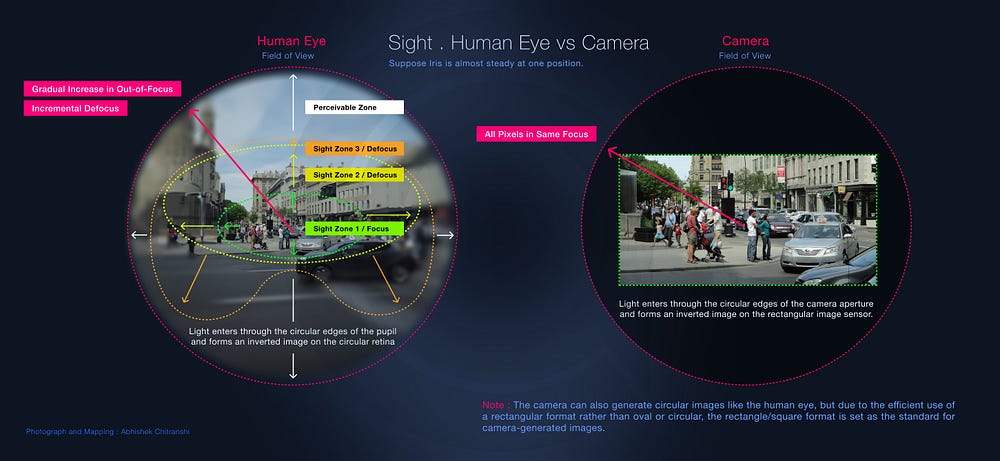

1. Sighting Entities : Human Eye Vs Camera

I explored a comparative analysis between the human eyesight mechanism and the functionality of camera lenses. While I am aware of the diverse input methods used in machine learning (AI), such as processing information from scans of stored entities and from live camera feeds, my current exploration focuses on comparing eyesight with the camera’s lens-sight mechanism as one example, aiming to establish a clearer connection between AI technologies and human experience design (HXD).

The biological mechanism of eyesight is not simple. In the image above, I have mapped how the human eye and a camera receive inputs to capture the entities and combinations of entities present in a busy commercial area.

- Both the pupil and the camera aperture admit light through a small circular opening, and both project a circular image onto their respective photoreceptors. A camera’s photosensitive pixels, however, are arranged in a rectangular grid, so the sensor records only a rectangular portion of that circular image, and the light falling outside the rectangle is not captured.

- The human eye achieves sharp focus only on a small area of its photoreceptor known as the fovea (sight zone 1 in the above image), while the rest of the circular image formed on the retina becomes progressively less sharp. How well the brain interprets this defocused information depends on the individual’s prior learning, level of intelligence and experience. In contrast, a camera is designed to hold sharp focus across the full area of its photoreceptor, without this incremental defocus.
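The first point above, a rectangular sensor sitting inside a circular image, can be put into numbers. The sketch below assumes an idealised lens whose image circle exactly touches the corners of the sensor, which real cameras only approximate. The function names are my own, chosen for illustration.

```python
import math


def inscribed_sensor(radius, aspect_w, aspect_h):
    """Width and height of the largest rectangle with the given aspect
    ratio that fits inside an image circle of the given radius."""
    diagonal = 2 * radius  # the rectangle's diagonal spans the circle
    scale = diagonal / math.hypot(aspect_w, aspect_h)
    return aspect_w * scale, aspect_h * scale


def captured_fraction(aspect_w, aspect_h):
    """Fraction of the circular image area that lands on the sensor."""
    width, height = inscribed_sensor(1.0, aspect_w, aspect_h)
    return (width * height) / math.pi  # circle area is pi * 1.0 ** 2


fraction_3_2 = captured_fraction(3, 2)   # a common still-camera ratio
fraction_16_9 = captured_fraction(16, 9)  # a common video ratio
```

Under these assumptions a 3:2 sensor records a little under 60% of the circular image, and a wider 16:9 sensor records less. The eye, by comparison, uses the whole circle but resolves only the centre sharply.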

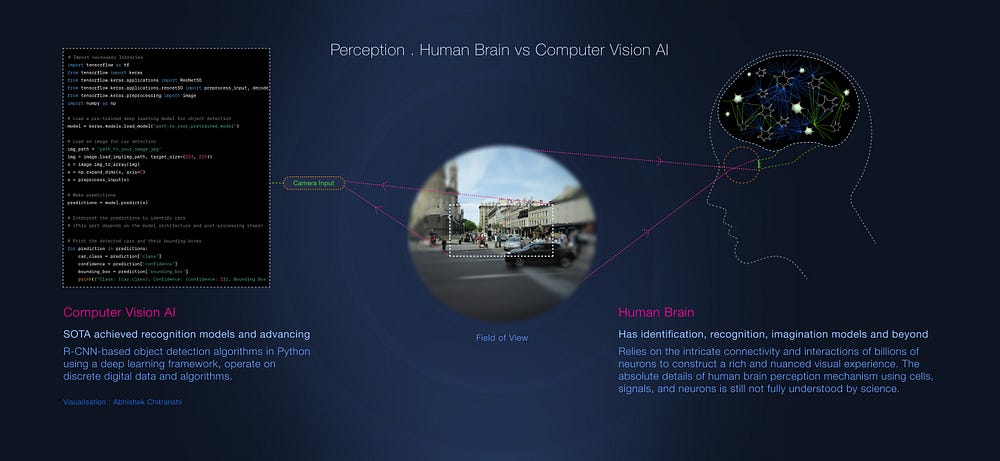

2. Perception of Entities : Human Brain Mostly Identifies Vs CV-AI Recognises

Although the human brain is highly complex and intricate in its processing, the basics of entity perception differ in the following ways:

- The human brain excels at identifying entities within its field of view, while Computer Vision AI primarily focuses on recognition. This distinction arises because CV-AI relies on machine learning models that are trained to recognise entities based on their sensed features. Identification is a more advanced cognitive process that goes beyond an entity’s superficial characteristics, as opposed to mere recognition based on sensed features. It draws on the extraordinary capabilities of the human brain, including contextual understanding, past experience, accumulated knowledge of behaviours, imagination, and more. While Computer Vision AI has the potential to evolve towards more sophisticated identification capabilities, whether such technology will be achieved is uncertain, and it would require ongoing development in the future.

- Brain identification relies on the intricate connectivity and interactions of billions of neurons to construct a rich and nuanced visual experience, whereas CV-AI operates on discrete digital data and algorithms, for example R-CNN-based object detection models implemented in Python with a deep learning framework. The exact mechanisms of human brain perception, involving cells, signals, and neurons, are still not fully understood by science.
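The difference between recognition and identification can be illustrated with a deliberately simple toy. The sketch below is not an R-CNN or any real detector. It is a nearest-prototype matcher over three made-up features, and every number and class in it is a hypothetical assumption chosen only to show how matching on surface features alone can go wrong.

```python
import math

# Hypothetical learned prototypes, one per known class:
# (width/height ratio, fraction of green pixels, edge straightness 0..1)
PROTOTYPES = {
    "sofa": (2.5, 0.05, 0.90),
    "tree": (0.6, 0.70, 0.20),
    "car":  (2.2, 0.02, 0.70),
}


def recognise(features):
    """Return (label, distance) for the prototype nearest to `features`."""
    return min(
        ((label, math.dist(features, proto)) for label, proto in PROTOTYPES.items()),
        key=lambda pair: pair[1],
    )


# A long, low, boxy street-side planter. "planter" is not a known class,
# so the matcher can only answer with the closest class it has seen.
planter = (2.6, 0.30, 0.85)
label, distance = recognise(planter)
```

With these made-up numbers the planter comes back labelled as a sofa, because its proportions and straight edges sit closest to that prototype. A person would rule that out from context (it is outdoors, on a kerb, with plants in it), which is the identification step this toy has no access to.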

3. How Humans Perceive AI’s Perception : AI development tools, simulations and products

This constitutes a pivotal aspect of AI and design collaboration. It plays a significant role when humans seek to understand, in a simple, intuitive and human-like manner, how CV-AI perceives and takes actions (both automated and manual) through the tools, simulations and products built around it. This underscores the importance of human experience design.

Autonomous Vehicle

For instance, when we consider the application of CV-AI in industries such as automotive and robotics, particularly in advanced areas like autonomous vehicles (CV-AV), the number of challenging and critical use cases, as well as edge cases, grows significantly.

To assess AI performance, including recognition accuracy and actions taken in accordance with predefined rules, we rely on AI development tools. These tools enable the development team to gain a deep understanding of how CV-AI perceives information at a granular level. This level of insight empowers the development team to experiment with AI actions, testing entity recognition across a wide range of complex use cases and edge cases encountered on roads and in traffic.

In the scenario of a commercial space with traffic as in image 4 below, how can a development team effectively test the AI system’s recognition accuracy and the driving actions executed by the autonomous vehicle (robotics) in situations characterised by a high degree of complexity, including numerous challenging use-cases and edge cases?

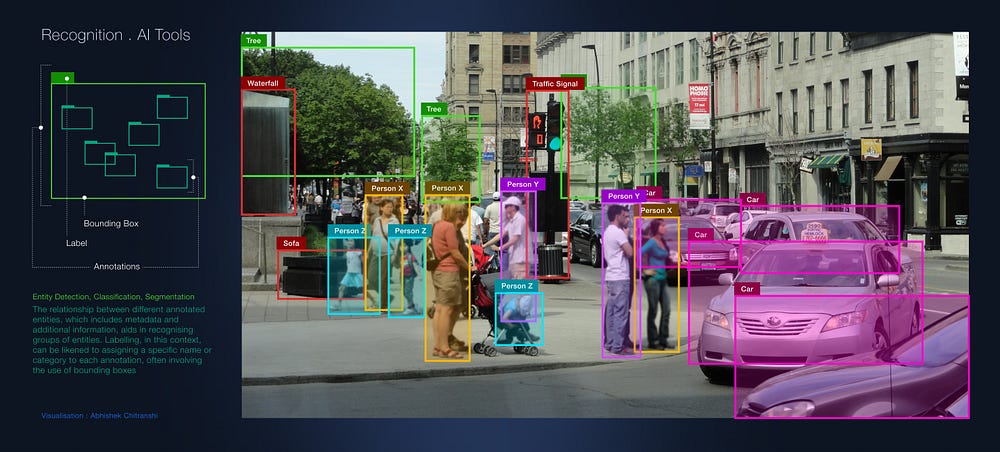

1. Recognition Accuracy

To assess entity recognition accuracy, the dev team must translate CV-AI perception into a visual format. This facilitates communication between the dev team and stakeholders, and between users or team members and the AI, and it enables tracking, measurement, development and intuitive use of the final products. To accomplish this, the technique of 2D and 3D bounding box annotation with labelled visualisation has been created for computer vision AI development tools.

Does the bounding box technique include human experience design (HXD), and is it effective in addressing the complexities of all use cases? Here are some challenges and limitations that I observe in using this technique to assess recognition accuracy for the above use case (image 5):

- Overlapping Labels Legibility: Bounding box recognition functions effectively when the entities in view are well separated, but does it remain intuitive when numerous entities in view overlap? The labels of trees may become obscured when positioned behind traffic signal labels.

- Bounding Box Layering Based on Depth: Entities’ detection bounding boxes are layered according to their depth, determined by their distance from the CV-enabled camera. The overlap of entities within the view can potentially be managed through entity on/off filters and colour changes, but other challenges arise when analysing the relationships between entities requires all of them to be switched on in the filters.

- Misperception: There is a risk of incorrect recognition for certain objects in view. For example, there is a high likelihood that the water curtain over a stone cladding could be misrecognised as a waterfall, and a street-side planter might be mistakenly recognised as a sofa, as in image 4.
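As a rough illustration of what a 2D bounding box annotation contains and how a predicted box can be scored against a hand-labelled one, here is a minimal sketch. It uses intersection over union (IoU), a widely used overlap measure. The `Box` class, the 0.5 threshold and the pixel coordinates are assumptions made for this example rather than the format of any particular annotation tool.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Box:
    """A labelled, axis-aligned 2D bounding box in pixel coordinates."""
    label: str
    x_min: float
    y_min: float
    x_max: float
    y_max: float

    @property
    def area(self):
        return max(0.0, self.x_max - self.x_min) * max(0.0, self.y_max - self.y_min)


def iou(a, b):
    """Intersection over union of two boxes, between 0.0 and 1.0."""
    inter_w = min(a.x_max, b.x_max) - max(a.x_min, b.x_min)
    inter_h = min(a.y_max, b.y_max) - max(a.y_min, b.y_min)
    if inter_w <= 0 or inter_h <= 0:
        return 0.0  # the boxes do not overlap at all
    intersection = inter_w * inter_h
    return intersection / (a.area + b.area - intersection)


def is_correct(predicted, truth, threshold=0.5):
    """Count a prediction as correct when the label matches and the
    boxes overlap enough. The threshold is an assumed convention."""
    return predicted.label == truth.label and iou(predicted, truth) >= threshold


truth = Box("pedestrian", 10, 10, 30, 50)      # hand-drawn annotation
predicted = Box("pedestrian", 12, 10, 32, 50)  # what the model returned
overlap = iou(predicted, truth)
```

A single number like this is convenient for tracking and measurement, but it says nothing about whether a person looking at the screen can read the result, which is where the design questions begin.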

These are examples that highlight the absence of human experience design, leading to many such performance challenges in the efficient development of CV-AI for autonomous vehicles.
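The first two challenges can at least be detected programmatically before a person has to squint at the screen. The sketch below assumes each detection carries a label rectangle and a depth in metres. The `Detection` class, the scene values and the rule "the farther label is the obscured one" are all hypothetical choices for illustration.

```python
from dataclasses import dataclass
from itertools import combinations


@dataclass(frozen=True)
class Detection:
    name: str
    label_box: tuple  # (x_min, y_min, x_max, y_max) of the text label, in pixels
    depth_m: float    # distance from the CV-enabled camera


def boxes_overlap(a, b):
    """True when two (x_min, y_min, x_max, y_max) rectangles intersect."""
    return a[0] < b[2] and b[0] < a[2] and a[1] < b[3] and b[1] < a[3]


def draw_order(detections):
    """Farthest first, so that nearer boxes and labels are painted on top."""
    return sorted(detections, key=lambda d: d.depth_m, reverse=True)


def obscured_labels(detections):
    """Names of detections whose label is covered by a nearer label."""
    hidden = set()
    for a, b in combinations(detections, 2):
        if boxes_overlap(a.label_box, b.label_box):
            farther = a if a.depth_m > b.depth_m else b
            hidden.add(farther.name)
    return sorted(hidden)


# Hypothetical scene: a tree standing behind a traffic signal.
scene = [
    Detection("tree", (100, 40, 160, 60), 25.0),
    Detection("traffic signal", (120, 45, 200, 65), 12.0),
    Detection("pedestrian", (300, 200, 370, 220), 8.0),
]
hidden = obscured_labels(scene)
order = [d.name for d in draw_order(scene)]
```

Detecting the collision is the easy part. Deciding what to do about it (move the label, fade it, group it, or let the user filter it) is a design decision rather than an algorithmic one.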

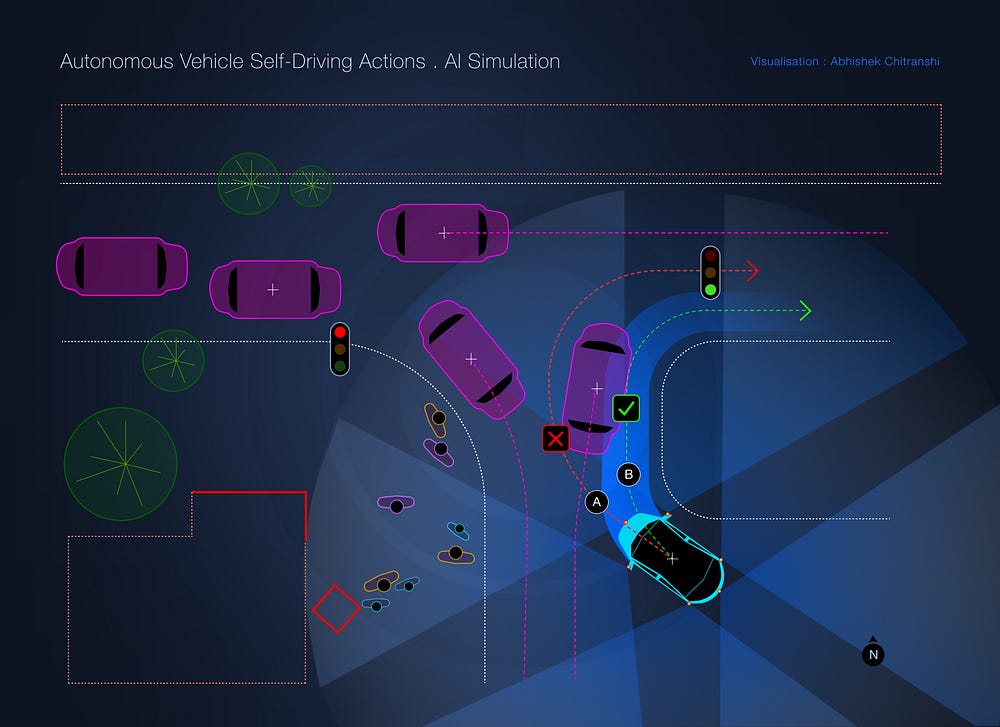

2. Self-Driving Actions : Simulation and Experiments

To assess the performance of CV-AI in autonomous vehicles, the evaluation involves creating top-view maps of all entities recognised through a 360º camera system (comprising six cameras mounted on the autonomous vehicle) and determining distance, speed, angles and more using LiDAR and other sensor technologies within the vehicle. All recognitions should be accompanied by visualisations, so that the team can interact and experiment efficiently to evaluate the accuracy of all defined rules governing CV-AI actions in complex use-case scenarios, as visualised in this example of a commercial area traffic signal (Image 5).
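A top-view map is, at its simplest, a change of coordinates. The sketch below assumes each recognised entity arrives with a range in metres and a bearing in degrees (0 is straight ahead, positive is clockwise), and places it on a vehicle-centred plan. These conventions and the function names are assumptions for illustration. A real sensor-fusion stack is far more involved.

```python
import math


def to_top_view(range_m, bearing_deg):
    """Convert a range and bearing into vehicle-centred plan coordinates.

    Returns (x, y) in metres, where x points right and y points forward.
    """
    theta = math.radians(bearing_deg)
    return range_m * math.sin(theta), range_m * math.cos(theta)


def closing_speed(range_then_m, range_now_m, dt_s):
    """Rate at which an entity is approaching, in metres per second.

    Positive when the gap is shrinking, estimated from two range readings.
    """
    return (range_then_m - range_now_m) / dt_s


# Hypothetical readings: a car 20 m away, 30 degrees to the right,
# that was 23 m away half a second earlier.
car_x, car_y = to_top_view(20.0, 30.0)
car_closing = closing_speed(23.0, 20.0, 0.5)
```

Plotting these (x, y) points for every entity gives the development team one shared picture in which distances and angles can be read directly rather than inferred from six separate camera views.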

Autonomous Actions

Let’s consider a few examples of autonomous actions in the scenario above. Since the focus is on the action performance of one specific autonomous vehicle, let’s call it ‘Blu’.

- In this scenario, Blu recognises and perceives a total of 23 entities and combinations of entities. However, it makes two incorrect recognitions: it mistakes a water curtain (over a stone wall) for a waterfall and a street-side planter for a sofa. Fortunately, these errors do not pose a driving hazard as Blu remains on its designated track.

- Blu’s destination is set to the east, and the system calculates two possible tracks, namely Track A and Track B. After evaluating various factors, such as approaching vehicle speed, distance, angle, Blu’s own route, pedestrian body movement (to detect crossing intentions), and traffic signal status, Blu’s system selects Track B, indicated by a green signal.

- To navigate along Track B, Blu adjusts its speed and turn angle, while carefully considering the calculated risk of collision and maintaining controlled situational collision avoidance.
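The track decision described above can be sketched as a small set of explicit rules. This is a simplified illustration under stated assumptions, not how any production vehicle decides. The `Track` fields, the 4-second time-to-collision threshold and every number for Track A and Track B are hypothetical. Only the outcome (Track B, on a green signal) comes from the scenario.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Track:
    name: str
    signal: str                # "green" or "red"
    gap_m: float               # distance to the nearest approaching vehicle
    closing_speed_mps: float   # positive when that gap is shrinking
    pedestrian_crossing: bool  # crossing intention detected on this track


def time_to_collision(track):
    """Seconds until the gap closes, or infinity if it is not closing."""
    if track.closing_speed_mps <= 0:
        return float("inf")
    return track.gap_m / track.closing_speed_mps


def is_permitted(track, min_ttc_s=4.0):
    """A track is usable only if every rule allows it."""
    return (
        track.signal == "green"
        and not track.pedestrian_crossing
        and time_to_collision(track) >= min_ttc_s
    )


def select_track(tracks, min_ttc_s=4.0):
    """Pick the permitted track with the largest safety margin, if any."""
    permitted = [t for t in tracks if is_permitted(t, min_ttc_s)]
    if not permitted:
        return None  # no safe option: the vehicle should hold position
    return max(permitted, key=time_to_collision)


# Hypothetical inputs for the two tracks in the scenario.
track_a = Track("A", "red", 40.0, 5.0, True)
track_b = Track("B", "green", 60.0, 6.0, False)
chosen = select_track([track_a, track_b])
```

Writing the rules out this plainly is useful for the development team because each rejected track can be traced to the specific rule that excluded it, and that reasoning is exactly what a visualisation needs to surface.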

These are just a few examples, and there are many more such intricate use cases across various road and traffic conditions.

To experiment with, monitor and analyse all of the above actions and more, the system requires a human experience design approach that creates an efficient ecosystem for CV-AI development. This ecosystem should support efficient experiments and intuitive visual communication for the development team, and it should give passengers the information they need for a safer and more confident ride inside the autonomous vehicle. Achieving these objectives necessitates a human experience design perspective.

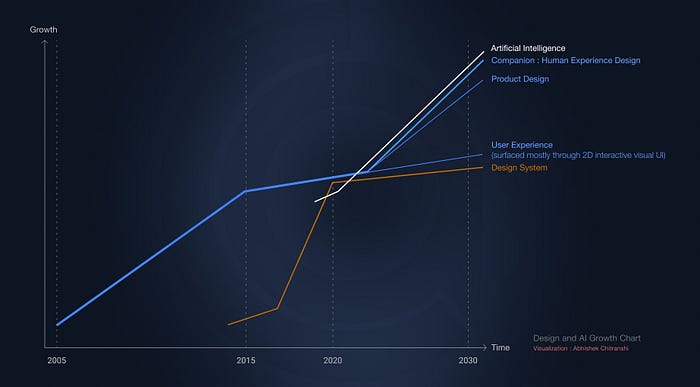

Growth of Design and AI Both

In the grand narrative of this article, the evolution of design vision unfolded, shifting from mere UX and product design to the profound realm of Human Experience Design (HXD), a new companion design discipline in the symphony of AI.

…